What are the three mapping policies of memory to cache

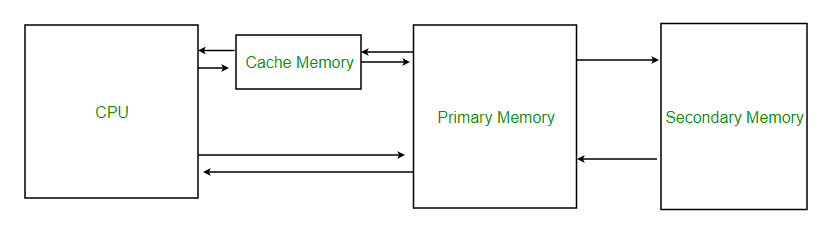

Cache Memory in Computer Organization

Cache memory is a special, very high-speed memory. It is used to speed up and synchronize with a high-speed CPU. Cache memory is costlier than main memory or disk memory but more economical than CPU registers. Cache memory is an extremely fast memory type that acts as a buffer between RAM and the CPU. It holds frequently requested data and instructions so that they are immediately available to the CPU when needed.

Cache memory is used to reduce the average time to access data from main memory. The cache is a smaller and faster memory that stores copies of the data from frequently used main memory locations. A CPU contains several independent caches, which store instructions and data.

Levels of memory:

- Level 1 or Register –

It is a type of memory inside the CPU that holds the data the CPU is working on immediately. The most commonly used registers are the accumulator, program counter, address register, etc.

- Level 2 or Cache memory –

It is a very fast memory with a short access time, where data is temporarily stored for faster access.

- Level 3 or Main Memory –

It is the memory on which the computer currently works. It is small in size, and once power is off, data no longer stays in this memory.

- Level 4 or Secondary Memory –

It is external memory which is not as fast as main memory, but data stays permanently in this memory.

Cache Performance:

When the processor needs to read or write a location in main memory, it first checks for a corresponding entry in the cache.

- If the processor finds that the memory location is in the cache, a cache hit has occurred and data is read from the cache.

- If the processor does not find the memory location in the cache, a cache miss has occurred. For a cache miss, the cache allocates a new entry and copies in data from main memory, then the request is fulfilled from the contents of the cache.

The performance of cache memory is frequently measured in terms of a quantity called the hit ratio.

Hit ratio = hit / (hit + miss) = no. of hits / total accesses
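As a minimal sketch of the formula above, the hit ratio can be computed from two counters. The function name `hit_ratio` and the choice to return 0.0 when there were no accesses are assumptions made for this illustration.

```python
def hit_ratio(hits: int, misses: int) -> float:
    """Return hits / (hits + misses), or 0.0 if there were no accesses."""
    total = hits + misses
    return hits / total if total else 0.0


# 90 hits out of 100 accesses
print(hit_ratio(90, 10))  # 0.9
```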

We can improve cache performance by using a larger cache block size and higher associativity, and by reducing the miss rate, the miss penalty, and the time to hit in the cache.

Cache Mapping:

There are three different types of mapping used for cache memory: direct mapping, associative mapping, and set-associative mapping. These are explained below.

- Direct Mapping –

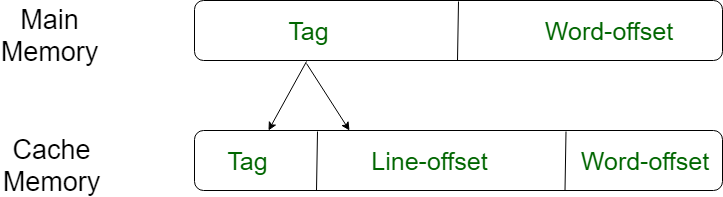

The simplest technique, known as direct mapping, maps each block of main memory into only one possible cache line. In other words, direct mapping assigns each memory block to a specific line in the cache. If a line is already occupied by a memory block when a new block needs to be loaded, the old block is discarded. The address is split into two parts, an index field and a tag field. The cache is used to store the tag field, whereas the rest is stored in main memory. Direct mapping's performance is directly proportional to the hit ratio.

$i = j \bmod m$

where $i$ = cache line number, $j$ = main memory block number, and $m$ = number of lines in the cache.
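The relation i = j mod m can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the cache tracks whole block numbers rather than tags, and the function name `direct_mapped_hits` is made up for this example.

```python
def direct_mapped_hits(blocks, m):
    """Count hits for a sequence of block numbers in a direct-mapped cache of m lines."""
    lines = [None] * m          # lines[i] holds the block number currently cached in line i
    hits = 0
    for j in blocks:
        i = j % m               # the only line block j may occupy
        if lines[i] == j:
            hits += 1
        else:
            lines[i] = j        # miss: the old block in this line is discarded
    return hits


# Blocks 0 and 8 both map to line 0 when m = 8, so they keep evicting each other.
print(direct_mapped_hits([0, 8, 0, 8], 8))  # 0
# Blocks 0 and 1 map to different lines and can coexist.
print(direct_mapped_hits([0, 1, 0, 1], 8))  # 2
```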

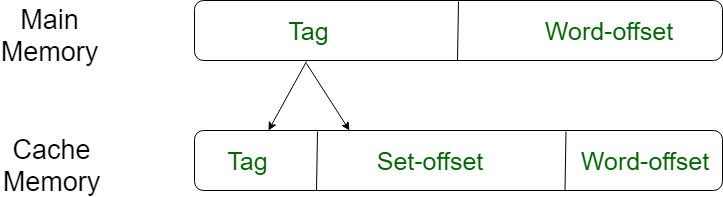

For purposes of cache access, each main memory address can be viewed as consisting of three fields. The least significant $w$ bits identify a unique word or byte within a block of main memory. In most contemporary machines, the address is at the byte level. The remaining $s$ bits specify one of the $2^s$ blocks of main memory. The cache logic interprets these $s$ bits as a tag of $s - r$ bits (most significant portion) and a line field of $r$ bits. This latter field identifies one of the $m = 2^r$ lines of the cache.
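The three-field view of an address can be sketched with shifts and masks. The function name `split_address` and the example widths (w = 2, r = 3) are assumptions chosen only to keep the numbers small.

```python
def split_address(addr: int, w: int, r: int):
    """Split an address into (tag, line, word) given w word bits and r line bits."""
    word = addr & ((1 << w) - 1)          # least significant w bits
    line = (addr >> w) & ((1 << r) - 1)   # next r bits select one of 2**r lines
    tag = addr >> (w + r)                 # remaining most significant bits
    return tag, line, word


# Address 0b1011_101_10 with w = 2 and r = 3
print(split_address(0b101110110, w=2, r=3))  # (11, 5, 2)
```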

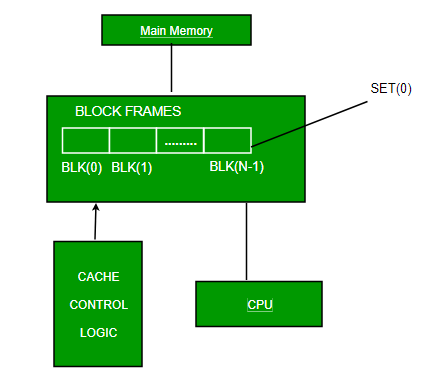

- Associative Mapping –

In this type of mapping, associative memory is used to store both the content and the addresses of the memory word. Any block can go into any line of the cache. This means that the word id bits are used to identify which word in the block is needed, but the tag becomes all of the remaining bits. This enables the placement of any word at any place in the cache memory. It is considered to be the fastest and the most flexible mapping form.

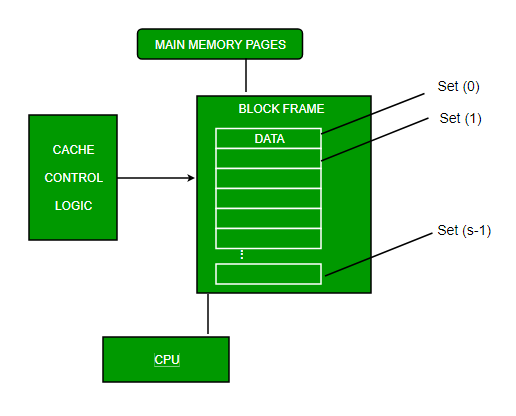

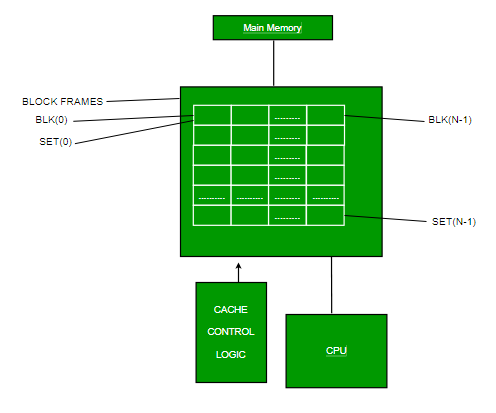

- Set-associative Mapping –

This form of mapping is an enhanced form of direct mapping in which the drawbacks of direct mapping are removed. Set-associative mapping addresses the problem of possible thrashing in the direct mapping method. Instead of having exactly one line that a block can map to in the cache, a few lines are grouped together to create a set. A block in memory can then map to any one of the lines of a specific set. Set-associative mapping allows each word that is present in the cache to have two or more words in main memory for the same index address. Set-associative cache mapping combines the best of the direct and associative cache mapping techniques. In this case, the cache consists of a number of sets, each of which consists of a number of lines. The relationships are

$m = v \times k$

$i = j \bmod v$

where $i$ = cache set number, $j$ = main memory block number, $v$ = number of sets, $m$ = number of lines in the cache, and $k$ = number of lines in each set.
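The set-associative relations can be sketched as a small simulator with v sets of k lines each. The replacement policy inside a set is not specified at this point in the text; least recently used (LRU) is assumed here purely for illustration, and the function name `set_associative_hits` is hypothetical. With k = 1 the sketch behaves like direct mapping, and with v = 1 like fully associative mapping.

```python
from collections import OrderedDict


def set_associative_hits(blocks, v, k):
    """Count hits for block numbers in a cache of v sets with k lines per set.

    Replacement within a set is LRU (an assumption for this sketch).
    """
    sets = [OrderedDict() for _ in range(v)]
    hits = 0
    for j in blocks:
        s = sets[j % v]                 # i = j mod v selects the set
        if j in s:
            hits += 1
            s.move_to_end(j)            # mark block as most recently used
        else:
            if len(s) == k:
                s.popitem(last=False)   # evict the least recently used block
            s[j] = True
    return hits


trace = [0, 8, 0, 8, 0, 8]
print(set_associative_hits(trace, v=8, k=1))  # 0  (direct mapped: blocks 0 and 8 thrash)
print(set_associative_hits(trace, v=4, k=2))  # 4  (2-way: both blocks fit in set 0)
print(set_associative_hits(trace, v=1, k=8))  # 4  (fully associative)
```

All three configurations hold m = 8 lines in total, so the difference in hits comes only from how the lines are grouped.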

Application of Cache Memory –

- Usually, the cache memory can store a reasonable number of blocks at any given time, but this number is small compared to the total number of blocks in main memory.

- The correspondence between the main memory blocks and those in the cache is specified by a mapping function.

Types of Cache –

- Primary Cache –

A primary cache is always located on the processor chip. This cache is small and its access time is comparable to that of processor registers.

- Secondary Cache –

Secondary cache is placed between the primary cache and the rest of the memory. It is referred to as the level 2 (L2) cache. Often, the Level 2 cache is also housed on the processor chip.

Locality of reference –

Since the cache is smaller than main memory, which part of main memory should be given priority and loaded into the cache is decided based on locality of reference.

Types of locality of reference:

- Spatial Locality of reference

This says that an element in close proximity to the current reference point is likely to be referenced next, and that later references are again likely to fall close to that point.

- Temporal Locality of reference

This says that a recently referenced item is likely to be referenced again soon, which is why the Least Recently Used algorithm is used. Whenever a page fault occurs on a word, not only that word but the complete page is loaded into main memory, because the spatial locality of reference rule says that if you refer to any word, the next word is likely to be referred to soon. In the same way, the complete block is loaded into the cache rather than a single word.
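A rough way to see why loading a complete block pays off is to count hits for a sequential scan of word addresses. This sketch assumes a cache large enough that nothing is ever evicted; the function name `sequential_scan_hits` is made up for the example.

```python
def sequential_scan_hits(num_words, words_per_block):
    """Count hits when words 0..num_words-1 are read in order.

    On a miss the whole block containing the word is loaded (no eviction assumed).
    """
    cached_blocks = set()
    hits = 0
    for addr in range(num_words):
        block = addr // words_per_block
        if block in cached_blocks:
            hits += 1                    # a neighbouring word already brought this block in
        else:
            cached_blocks.add(block)     # miss: load the complete block
    return hits


print(sequential_scan_hits(16, 4))  # 12 (only the first word of each block misses)
print(sequential_scan_hits(16, 1))  # 0  (one word per block, so every access misses)
```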

GATE Practice Questions –

Que-1: A computer has a 256 KByte, 4-way set associative, write back data cache with a block size of 32 Bytes. The processor sends 32-bit addresses to the cache controller. Each cache tag directory entry contains, in addition to the address tag, 2 valid bits, 1 modified bit and 1 replacement bit. The number of bits in the tag field of an address is

(A) 11 (B) 14 (C) 16 (D) 27

Answer: (C)

Explanation: https://www.geeksforgeeks.org/gate-gate-cs-2012-question-54/
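A short worked derivation of answer (C), assuming byte addressing and 1 KByte = 1024 bytes:

$$
\begin{aligned}
\text{lines} &= \frac{256\ \text{KB}}{32\ \text{B}} = \frac{2^{18}}{2^{5}} = 2^{13} \\
\text{sets} &= \frac{2^{13}}{4} = 2^{11} \;\Rightarrow\; 11 \text{ set index bits} \\
\text{block offset} &= \log_2 32 = 5 \text{ bits} \\
\text{tag} &= 32 - 11 - 5 = 16 \text{ bits}
\end{aligned}
$$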

Que-2: Consider the data given in the previous question. The size of the cache tag directory is

(A) 160 Kbits (B) 136 bits (C) 40 Kbits (D) 32 bits

Answer: (A)

Explanation: https://www.geeksforgeeks.org/gate-gate-cs-2012-question-55/
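A short worked derivation of answer (A), reusing the 16-bit tag from Que-1 and assuming one directory entry per cache line and 1 Kbit = 1024 bits:

$$
\begin{aligned}
\text{entries} &= \frac{256\ \text{KB}}{32\ \text{B}} = 2^{13} \\
\text{bits per entry} &= 16 + 2 + 1 + 1 = 20 \\
\text{directory size} &= 2^{13} \times 20 = 163840 \text{ bits} = 160 \text{ Kbits}
\end{aligned}
$$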

Que-3: An 8KB direct-mapped write-back cache is organized as multiple blocks, each of size 32 bytes. The processor generates 32-bit addresses. The cache controller maintains the tag information for each cache block comprising of the following.

- 1 Valid bit
- 1 Modified bit
- As many bits as the minimum needed to identify the memory block mapped in the cache.

What is the total size of memory needed at the cache controller to store meta-data (tags) for the cache?

(A) 4864 bits (B) 6144 bits (C) 6656 bits (D) 5376 bits

Answer: (D)

Explanation: https://www.geeksforgeeks.org/gate-gate-cs-2011-question-43/

Article contributed by Pooja Taneja and Vaishali Bhatia. Please write comments if you find anything incorrect, or you want to share more information about the topic discussed above.
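The arithmetic behind answer (D) can be checked with a small helper. This is a sketch under the assumptions that all sizes are powers of two and memory is byte addressed; the function name `tag_store_bits` and its parameters are hypothetical.

```python
def tag_store_bits(cache_bytes, block_bytes, ways, addr_bits, extra_bits):
    """Return (tag_bits, total_metadata_bits) for a cache.

    extra_bits counts the per-line status bits (valid, modified, ...).
    Sizes are assumed to be powers of two.
    """
    lines = cache_bytes // block_bytes
    sets = lines // ways
    offset_bits = (block_bytes - 1).bit_length()   # log2(block size)
    index_bits = (sets - 1).bit_length()           # log2(number of sets)
    tag_bits = addr_bits - index_bits - offset_bits
    return tag_bits, lines * (tag_bits + extra_bits)


# Que-3: 8 KB direct-mapped cache, 32-byte blocks, 32-bit addresses,
# 1 valid bit + 1 modified bit per line.
print(tag_store_bits(8 * 1024, 32, 1, 32, 2))  # (19, 5376)
```

Here 256 lines each need a 19-bit tag plus 2 status bits, giving 256 × 21 = 5376 bits.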

Source: https://www.geeksforgeeks.org/cache-memory-in-computer-organization/
